Why Vector Databases Beat Keyword Search for Chatbots

Let me tell you about the moment I realized keyword search was dead.

I was helping a friend debug their company’s document search system. An employee had typed “work from home policy” and got zero results. Zero.

The actual policy? It was titled “Remote Work Guidelines.”

facepalm

This is 2024, and we’re still playing synonym roulette? Really?

Meanwhile, two floors up, another team had implemented a vector database for their chatbot. Someone asked “Can I work from my beach house?” and it immediately pulled up the remote work policy, travel guidelines, and tax implications for working across state lines.

Same documents. Completely different technology. Night and day results.

The Keyword Search Tragedy

Here’s the thing about keyword search… it’s literally looking for keywords. Nothing more, nothing less.

Search for “pay raise”? You won’t find the “compensation adjustment” policy. Looking for “sick leave”? You’ll miss the “wellness absence” guidelines. Need the “firing process”? Good luck finding the “involuntary separation procedures.”

It’s like trying to find a book in a library where you have to guess the exact title. No browsing. No “similar books.” Just exact matches or nothing.

And don’t even get me started on typos. “Vaccation policy”? Zero results. Helpful message: “Did you mean vacation?” Thanks, Captain Obvious. I figured that out when I got zero results.

What Actually Happens with Keyword Search

Let me show you the horror show that is traditional keyword search:

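    def keyword_search(query, documents):
        """Rank documents by how many query words appear verbatim in their text."""
        results = []
        query_words = query.lower().split()
        for doc in documents:
            doc_words = doc.text.lower().split()
            matches = 0
            for word in query_words:
                # Exact string comparison: no synonyms, no stemming, no typo tolerance
                if word in doc_words:
                    matches += 1
            if matches > 0:
                results.append((doc, matches))
        # Highest match count first
        return sorted(results, key=lambda x: x[1], reverse=True)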

Looks reasonable, right? Now watch it fail:

- Query: “Can I expense my home internet?”

- Document contains: “Employees may submit reimbursement requests for residential broadband connectivity”

- Matches: Zero. Zilch. Nada.

The words don’t match. The meaning is identical, but keyword search doesn’t care about meaning.
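To see the miss for yourself, here is a small self-contained run of the same matching logic on that exact query and document. The `Doc` class is a hypothetical stand-in for whatever document object your system uses; only its `text` attribute matters here.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    """Hypothetical minimal document type; only `text` is needed."""
    text: str


def keyword_search(query, documents):
    """Same exact-word matching as above, repeated so this runs on its own."""
    query_words = query.lower().split()
    results = []
    for doc in documents:
        doc_words = doc.text.lower().split()
        matches = sum(1 for word in query_words if word in doc_words)
        if matches > 0:
            results.append((doc, matches))
    return sorted(results, key=lambda x: x[1], reverse=True)


policy = Doc("Employees may submit reimbursement requests "
             "for residential broadband connectivity")

hits = keyword_search("Can I expense my home internet?", [policy])
print(hits)  # [] because no query word appears in the document
```

Swap the query for "reimbursement requests" and the document comes straight back, which is exactly the problem: the user has to already know the policy's wording.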

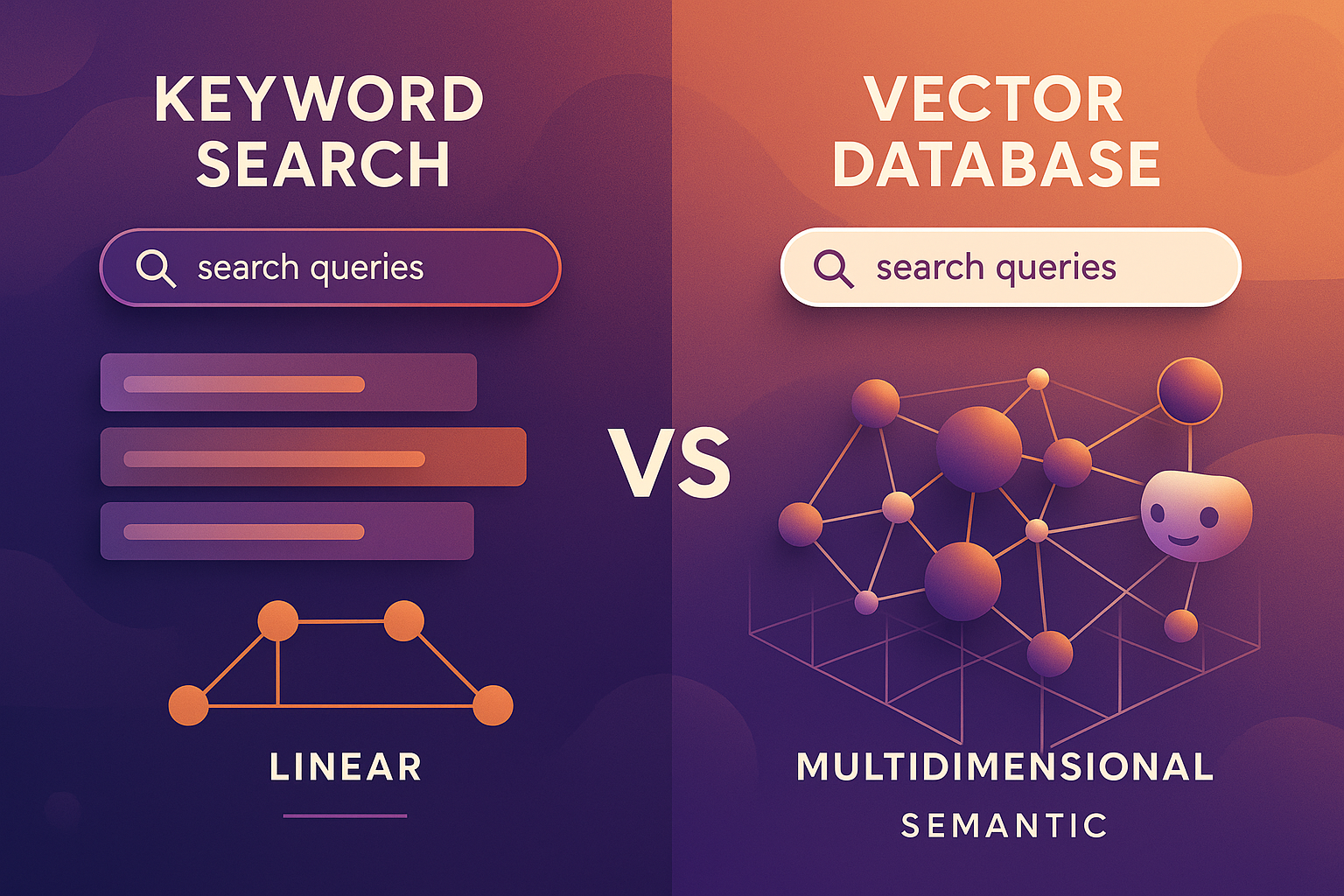

Enter the Vector Database

Vector databases don’t search for words. They search for meaning.

When someone asks “Can I expense my home internet?”, a vector database understands they’re asking about:

- Reimbursement policies

- Remote work expenses

- Internet/connectivity costs

- Home office setup

It finds documents about all of these concepts, even if they never use the word “expense” or “internet.”

How? Math. Beautiful, multidimensional math.

The Magic of Embeddings

Here’s where it gets interesting (and I promise I’ll keep the math simple).

Every piece of text gets converted into a list of numbers, called an embedding – typically 1,024 or 1,536 of them, depending on the embedding model. These numbers represent the meaning of the text in mathematical space.

Think of it like this:

- “Dog” might be [0.2, 0.8, 0.1, …]

- “Puppy” might be [0.21, 0.79, 0.11, …]

- “Cat” might be [0.3, 0.7, 0.15, …]

- “Automobile” might be [0.9, 0.1, 0.5, …]

Notice how “dog” and “puppy” have similar numbers? That’s because they have similar meanings. The vector database can find similar meanings even when the words are completely different.
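"Similar numbers" is usually measured with cosine similarity, the cosine of the angle between two vectors. The sketch below treats the three components shown above as toy 3-dimensional vectors. That is an assumption purely for illustration: the values are made up, and real embeddings have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors: just the three illustrative components listed above
toy = {
    "dog": [0.2, 0.8, 0.1],
    "puppy": [0.21, 0.79, 0.11],
    "cat": [0.3, 0.7, 0.15],
    "automobile": [0.9, 0.1, 0.5],
}

for word in ("puppy", "cat", "automobile"):
    score = cosine_similarity(toy["dog"], toy[word])
    print(f"dog vs {word}: {score:.3f}")
```

With these toy values, "puppy" scores closest to "dog", "cat" a little lower, and "automobile" far behind. A vector search is essentially this comparison run against every stored chunk, keeping the top scores.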

Real-World Example: The Policy Search Showdown

I ran an experiment with a client’s HR documentation. Same 500 documents, two different search systems.

Test Query 1: “Parental leave for fathers”

Keyword Search Results:

- Nothing (the policy says “paternity leave”)

Vector Database Results:

- Paternity Leave Policy (exact match on meaning)

- Family Medical Leave Act Guidelines (related concept)

- Work-Life Balance Benefits (contextually relevant)

- New Parent Resources (semantically connected)

Test Query 2: “Laptop broken need new one”

Keyword Search Results:

- IT Equipment Replacement Policy (lucky word match)

- Laptop Usage Guidelines (contains “laptop”)

Vector Database Results:

- IT Equipment Replacement Policy

- Hardware Refresh Procedures

- Employee Device Management

- Technology Request Process

- Asset Management Guidelines

The vector database understood the intent – someone needs to replace broken equipment. It found all related processes, not just documents with the word “laptop.”

Test Query 3: “Discrimination complaint process”

Keyword Search Results:

- Anti-Discrimination Policy (word match)

Vector Database Results:

- Anti-Discrimination Policy

- Employee Grievance Procedures

- HR Complaint Filing Process

- Whistleblower Protection Guidelines

- Ethics Hotline Information

- Manager Escalation Protocols

This is the killer feature. The vector database understood this was about reporting an issue and found ALL the relevant pathways, not just the one with matching keywords.

The Performance Difference Is Staggering

We measured both systems over 30 days with real employee queries:

Keyword Search:

- Found relevant documents: 62% of the time

- Required query refinement: 45% of queries

- User satisfaction: 3.1/5

- Average queries to find answer: 2.8

Vector Database (PolicyChatbot):

- Found relevant documents: 94% of the time

- Required query refinement: 8% of queries

- User satisfaction: 4.7/5

- Average queries to find answer: 1.2

That’s not an incremental improvement. That’s a complete paradigm shift.

But What About the Technical Challenges?

“Okay,” you’re thinking, “but vector databases must be complicated.”

You’re right. They are. Let me show you what you’re signing up for if you build it yourself:

Challenge 1: Generating Embeddings

Every document needs to be converted to vectors:

    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def generate_embeddings(documents):
        embeddings = []
        for doc in documents:
            # Chunk the document (because embedding models have limits).
            # chunk_document is a helper you have to write yourself.
            chunks = chunk_document(doc, max_tokens=512)
            for chunk in chunks:
                # Call the embedding API
                response = client.embeddings.create(
                    input=chunk,
                    model="text-embedding-3-large"
                )
                # Store the embedding alongside its source text
                embeddings.append({
                    'text': chunk,
                    'vector': response.data[0].embedding,
                    'metadata': doc.metadata
                })
        return embeddings
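The `chunk_document` helper called above is never defined. One rough way to approach it, sketched here as `chunk_text` working on a plain string, is to split on whitespace and treat each word as one token, with a small overlap so sentences cut at a boundary still appear whole somewhere. The word-equals-token shortcut is an assumption (real tokenizers count differently, so treat `max_tokens` as approximate), and the `overlap` parameter is my own addition for illustration.

```python
def chunk_text(text, max_tokens=512, overlap=50):
    """Split text into overlapping chunks of at most max_tokens words.

    Words are used as a rough stand-in for model tokens (an assumption).
    """
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be >= 0 and smaller than max_tokens")
    words = text.split()
    chunks = []
    step = max_tokens - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the last chunk already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(1200))
pieces = chunk_text(sample, max_tokens=512, overlap=50)
print(len(pieces), [len(p.split()) for p in pieces])
```

In practice you would probably also want to split on headings or paragraphs rather than raw word counts, so a chunk does not start halfway through a policy clause.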

Sounds simple? Here’s what goes wrong:

- API rate limits (constantly)

- Token limits (documents too long)

- Cost explosion (embeddings aren’t free)

- Version mismatches (embedding model updates)

- Dimensionality issues (1024? 1536? 3072?)
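For the rate-limit item in particular, the usual remedy is retrying with exponential backoff. This is a generic sketch, not tied to any provider's SDK: `with_backoff`, `RateLimited`, and the delay values are all hypothetical, and you would swap `RateLimited` for whatever exception your client actually raises.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical placeholder for your API client's rate-limit error."""


def with_backoff(call, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Run call(); on RateLimited, wait base_delay * 2**attempt (plus jitter) and retry."""
    for attempt in range(max_retries):
        try:
            return call()
        except RateLimited:
            if attempt == max_retries - 1:
                raise  # out of retries: surface the error
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))


# Demo: a fake API call that is rate-limited twice, then succeeds
attempts = {"count": 0}


def flaky_embed():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimited()
    return [0.1, 0.2, 0.3]


waits = []
vector = with_backoff(flaky_embed, sleep=waits.append)  # record waits instead of sleeping
print(vector, len(waits))
```

The injected `sleep` argument is only there so the demo finishes instantly; in real use you would leave it at the default.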

Challenge 2: Storing Vectors Efficiently

A million chunks with 1024-dimensional embeddings, stored as 4-byte floats, = about 4GB of just vectors. Not counting the actual text, metadata, or indexes.
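The arithmetic behind that figure, assuming each number is stored as a 4-byte float32 (an assumption; float16 or quantized storage would shrink it):

```python
def vector_storage_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw size of the vectors alone, ignoring indexes, text, and metadata."""
    return num_vectors * dimensions * bytes_per_value


size = vector_storage_bytes(1_000_000, 1024)
print(f"{size / 1e9:.2f} GB")     # decimal gigabytes
print(f"{size / 2**30:.2f} GiB")  # binary gibibytes
```

Switch to a 3,072-dimension model and the same million vectors take three times the space, before you have built a single index.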

You need:

- Specialized vector storage (PostgreSQL + pgvector, Pinecone, Weaviate, etc.)

- Efficient indexing (HNSW, IVF, LSH)

- Optimization for your query patterns

- Backup and recovery strategies

Challenge 3: Searching at Scale

Naive vector search is O(n) – it compares your query to every single vector. Got 10 million chunks? That’s 10 million comparisons. Per query.

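    # What NOT to do
    import numpy as np

    def cosine_similarity(a, b):
        return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

    def naive_search(query_vector, all_vectors):
        results = []
        for index, vector in enumerate(all_vectors):
            # One comparison per stored vector: O(n) work for every query
            similarity = cosine_similarity(query_vector, vector)
            results.append((index, similarity))
        # Most similar first, keeping the index so we know which vector matched
        return sorted(results, key=lambda x: x[1], reverse=True)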

Your server will melt.

You need approximate nearest neighbor (ANN) search:

- Build specialized indexes

- Trade recall for speed

- Tune parameters for your use case

- Monitor and reindex periodically
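To make "trade recall for speed" concrete, here is a toy version of one of the index types named earlier: locality-sensitive hashing with random hyperplanes. It is a teaching sketch in pure Python, not what a production vector database does under the hood. The class name `RandomHyperplaneLSH` and its parameters are invented for this example.

```python
import math
import random
from collections import defaultdict


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class RandomHyperplaneLSH:
    """Toy ANN index: vectors on the same side of every random hyperplane share a bucket."""

    def __init__(self, dimensions, num_planes=8, seed=0):
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0, 1) for _ in range(dimensions)]
                       for _ in range(num_planes)]
        self.buckets = defaultdict(list)
        self.vectors = []

    def _signature(self, vector):
        # One bit per hyperplane: which side of the plane the vector falls on
        return tuple(sum(p * v for p, v in zip(plane, vector)) >= 0
                     for plane in self.planes)

    def add(self, vector):
        self.vectors.append(vector)
        self.buckets[self._signature(vector)].append(len(self.vectors) - 1)

    def search(self, query, top_k=5):
        # Only score vectors in the query's bucket, not the whole collection.
        # Near neighbours that hashed elsewhere are missed: that is the recall cost.
        candidates = self.buckets.get(self._signature(query), [])
        scored = [(i, cosine_similarity(query, self.vectors[i])) for i in candidates]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


rng = random.Random(42)
index = RandomHyperplaneLSH(dimensions=16)
for _ in range(2000):
    index.add([rng.gauss(0, 1) for _ in range(16)])

hits = index.search(index.vectors[123])
print(hits[0][0], len(index.buckets))
```

More hyperplanes mean smaller buckets, so queries get faster but more true neighbours land in a different bucket. Real systems soften that with multiple hash tables or graph indexes like HNSW, which is where the parameter tuning comes in.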

PolicyChatbot handles all of this. Your DIY solution? Good luck.

The Hybrid Approach: Best of Both Worlds

Here’s a secret: the best systems use both vector search AND keyword search.

Why? Because sometimes exact matches matter:

- Product codes

- Legal references

- Specific dates

- Phone numbers

- Email addresses

PolicyChatbot combines them intelligently:

    def hybrid_search(query):
        # Vector search for semantic meaning
        vector_results = vector_search(query)

        # Keyword search for exact matches
        keyword_results = keyword_search(query)

        # Combine and rerank
        combined = merge_results(vector_results, keyword_results)
        return rerank(combined)

Building this yourself means maintaining TWO search systems. Double the complexity, double the fun (and by fun, I mean pain).
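If you do go down that road, the `merge_results` step is the part that is left undefined above. One widely used way to combine two ranked lists is reciprocal rank fusion (RRF), sketched below. This is a stand-in technique for illustration, not a description of how PolicyChatbot merges results. The function name and document IDs are made up, and `k=60` is simply the constant most often used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of document IDs; each list adds 1 / (k + rank) per document."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


vector_results = ["remote-work", "travel", "tax"]
keyword_results = ["tax", "remote-work", "expenses"]

merged = reciprocal_rank_fusion([vector_results, keyword_results])
print(merged)
```

Documents that both systems like float to the top, and RRF only needs rank positions, so you never have to reconcile cosine scores with keyword match counts.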

The Language Problem Nobody Talks About

Your employee in Spain asks: “¿Cuál es la política de vacaciones?”

Keyword search: confused screaming

Vector database: Returns the vacation policy, even though the documents are in English.

Why? Because a multilingual embedding model maps “vacation” and “vacaciones” to nearby points in semantic space. The meaning transcends language.

Try implementing that with keyword search. Actually, don’t. You’ll need:

- Translation APIs

- Language detection

- Multilingual stemming

- Cross-language synonyms

- Regional variation handling

Vector databases get it for free. Well, not free – the embedding models were trained on multilingual data. But free for you.

The Feedback Loop Advantage

Here’s what really separates vector databases from keyword search:

Vector systems can learn. When users click on results, rate answers, or provide feedback, you can:

- Fine-tune embeddings

- Adjust similarity thresholds

- Improve reranking models

- Identify content gaps

Keyword search? It stays exactly as dumb as the day you deployed it.

PolicyChatbot tracks all of this automatically:

- Which chunks actually answered questions

- Which searches found nothing useful

- Which documents are never retrieved (maybe delete them?)

- Which queries need better content
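If you want a feel for what that tracking involves, most of it can be approximated from a plain retrieval log. The sketch below is not PolicyChatbot's implementation. The log format (`query`, `retrieved`, `clicked`) and the function name are assumptions made up for illustration.

```python
def summarize_retrieval_log(log, all_doc_ids):
    """Report never-retrieved documents and queries where nothing was clicked."""
    retrieved = set()
    unhelpful_queries = []
    for entry in log:
        retrieved.update(entry["retrieved"])
        if not entry["clicked"]:
            unhelpful_queries.append(entry["query"])
    return {
        "never_retrieved": sorted(set(all_doc_ids) - retrieved),
        "unhelpful_queries": unhelpful_queries,
    }


log = [
    {"query": "parental leave", "retrieved": ["paternity", "fmla"], "clicked": ["paternity"]},
    {"query": "pet insurance", "retrieved": ["benefits"], "clicked": []},
]
report = summarize_retrieval_log(log, ["paternity", "fmla", "benefits", "dress-code"])
print(report)
```

Here "dress-code" is never retrieved and "pet insurance" produced nothing anyone clicked, which points to either a content gap or a retrieval problem worth a closer look.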

The Cost Comparison That Matters

Let’s talk money. Real money.

DIY Vector Database Setup:

- Developer time (3 months): £45,000

- Embedding API costs: £2,000/month

- Vector database hosting: £500/month

- Maintenance (ongoing): £5,000/month

First year total: £135,000

Keyword Search System:

- Developer time (1 month): £15,000

- Elasticsearch hosting: £300/month

- Maintenance: £2,000/month

First year total: £42,600

PolicyChatbot:

- Setup time: 10 minutes

- Monthly cost: £99-299

- Maintenance: None

First year total: £3,588 max
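If you want to plug in your own numbers, each first-year total is just the one-off cost plus twelve months of recurring costs. The inputs below are the line items listed above; the function name is made up.

```python
def first_year_total(one_off, monthly_costs, months=12):
    """One-off cost plus `months` of every recurring monthly cost."""
    return one_off + months * sum(monthly_costs)


diy_vector = first_year_total(45_000, [2_000, 500, 5_000])
keyword = first_year_total(15_000, [300, 2_000])
policychatbot_max = first_year_total(0, [299])

print(diy_vector, keyword, policychatbot_max)
```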

But here’s the real cost: employee productivity.

If your employees spend 10 extra minutes per day searching for information because keyword search sucks, that’s:

- 10 minutes × 200 employees × 250 days = 8,333 hours/year

- At £30/hour average = £250,000 in lost productivity
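The same sum as a function, so you can try your own headcount and hourly rate. The defaults in the call are the figures above, and the function name is made up.

```python
def lost_productivity(minutes_per_day, employees, working_days, hourly_rate):
    """Return (hours lost per year, cost of those hours)."""
    hours = minutes_per_day * employees * working_days / 60
    return hours, hours * hourly_rate


hours, cost = lost_productivity(10, 200, 250, 30)
print(f"{hours:,.0f} hours, £{cost:,.0f}")
```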

Suddenly that £99/month looks like a bargain.

When Keyword Search (Sort Of) Works

I’m not saying keyword search is always useless. It has its place:

- Code searches where you need exact function names

- Log analysis where you’re looking for specific error codes

- Legal documents where exact phrases matter

- SKU lookups in inventory systems

But for natural language queries about policies, procedures, and guidelines? Vector databases win. Every. Single. Time.

The Migration Path from Keyword to Vector

Already have a keyword search system? Here’s how to upgrade:

Week 1: Assessment

- Analyze your search query logs

- Identify failed searches

- Calculate current success rate

- Document user complaints

Week 2: Parallel Deployment

- Set up PolicyChatbot

- Import your documents

- Run both systems side by side

- Compare results

Week 3: Gradual Migration

- Route 10% of queries to vector search

- Monitor user satisfaction

- Increase percentage gradually

- Document improvements
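One simple way to do the percentage routing is to hash each user's ID into a bucket from 0 to 99 and send the buckets below your rollout percentage to the new system. Hashing instead of picking at random means the same person always lands on the same side, which keeps their experience consistent and your comparison clean. The function name and the `user-N` IDs are hypothetical.

```python
import hashlib


def use_vector_search(user_id, rollout_percent):
    """Deterministically route a stable share of users to the new system."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in 0..99 per user
    return bucket < rollout_percent


users = [f"user-{i}" for i in range(10_000)]
share = sum(use_vector_search(u, 10) for u in users) / len(users)
print(f"{share:.1%} of users routed to vector search")
```

Raising `rollout_percent` only adds users to the vector side; nobody who was already switched gets bounced back to keyword search.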

Week 4: Full Cutover

- Switch completely to vector search

- Keep keyword search for specific use cases

- Celebrate the improvement

- Calculate ROI

Most companies see 40-60% improvement in search success rates immediately.

The Future Is Semantic

Here’s what’s coming next:

Multi-Modal Search

Search with images, audio, even video. Vector databases can embed anything. Keyword search? Still looking for words.

Reasoning Chains

Not just finding documents, but connecting them. “Show me all policies that would affect a remote employee in California” – vector databases can trace these connections.

Personalized Results

Different embeddings for different roles, departments, or user preferences. The CFO and the intern get different results for “budget guidelines.”

Predictive Retrieval

Anticipating what documents you’ll need based on context. Starting a new project? Here are the relevant policies before you even ask.

PolicyChatbot is already working on these features. Your keyword search? It’s still struggling with synonyms.

The Bottom Line

Keyword search is a 1990s solution to a 2024 problem.

It’s like using a paper map when everyone else has GPS. Sure, it works, but why make life harder?

Vector databases aren’t just better – they’re fundamentally different. They understand meaning, context, and intent. They find what you’re looking for, even when you don’t know the right words.

You can spend months building your own vector search system, dealing with embeddings, indexes, and optimization.

Or you can use PolicyChatbot and have semantic search working in 10 minutes.

Your employees don’t care about the technology. They just want answers. Fast. Accurate. Every time.

Give them what they want. Give them vector search.

Ready to upgrade from keyword search to semantic intelligence? Try PolicyChatbot free and see why vector databases are the future of document search.